With Twitter deteriorating by the day, there is a need for social media options. And, one of those options is Mastodon and the Fediverse. Unlike other social media where a person signs up for an account at a central repository (think Facebook, Twitter, or LinkedIn), there is no single repository or instance of Mastodon. Rather, Mastodon’s only real organization is a communication protocol called ActivityPub, which lets posts from one instance reach users on other Mastodon communities/servers. That network of connected servers is what is called the Fediverse.

“Decentralization is a big part of Mastodon’s DNA and is at the forefront of our mission.”

General help for understanding Mastodon and setting up an account is available from Buffer, Wired, EFF, and TidBITS.

As Mastodon is open source software created by “geeks,” there is some initial complexity in light of all the available options. So, no single help or set-up guidance is going to work for all possible users. And, as a working lawyer who has been extremely busy the past few years — ahem, unemployment — I personally have only scratched the surface here of what is happening at Mastodon.

But, as the set-up guides above indicate, the first thing to do when setting up a Mastodon account is to select a community — a Fediverse — to join. Because of my interest in open source software, I signed up for an account with fosstodon, an open source software community.

Besides common interests, the key issues for deciding your Fediverse community are knowing how that organization will behave and how it is structured, including its code of conduct, its server rules, and how you can support your community (as Mastodon is open source, these Fediverse communities are run by volunteers, so having a way to contribute your financial support to the maintainers of the Fediverse is vital). As an example, here is the about page for the fosstodon Fediverse.

Once a Fediverse community is selected, you then create a user-id and password for that Fediverse. As a result, handles on Mastodon are actually in two parts, the user-id and the community's server:

@user-id@server

On the web, this handle turns into:

https://server/@user-id

For example, my handle is @vforberger@fosstodon.org, so my web address for my Mastodon account is https://fosstodon.org/@vforberger. And, I log in to my Mastodon account by going to https://fosstodon.org/.
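The mapping from a handle to a web address can be sketched in a few lines of Python. This is only an illustration of the pattern in the example above; the function name `handle_to_profile_url` is made up for this sketch and is not part of Mastodon.

```python
def handle_to_profile_url(handle: str) -> str:
    """Turn a handle like '@user@server' into 'https://server/@user'.

    Hypothetical helper for illustration; it only follows the pattern
    shown in the example handle above.
    """
    # Drop the leading '@', then split the user-id from the server name.
    user, _, server = handle.lstrip("@").partition("@")
    if not user or not server:
        raise ValueError(f"expected a handle like @user@server, got {handle!r}")
    return f"https://{server}/@{user}"


print(handle_to_profile_url("@vforberger@fosstodon.org"))
# prints https://fosstodon.org/@vforberger
```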

Just because you connect to one Fediverse community, however, does not mean your connections or the information being shared are restricted to that one community/server. Because the Fediverse is a networked collection of many, many communities/servers, posts, or toots, are shared across the entire Fediverse (unless a Fediverse community has intentionally decided NOT to share information with another, specific Fediverse community; more on that issue below).

So, even though I am on fosstodon, I see the toots from numerous other people outside of the fosstodon community and I even subscribe or follow accounts/people on other Fediverse communities.

There is no algorithm with Mastodon

Because there is no corporate entity in search of profits, there is no algorithm running behind the scenes filling your feed with posts. As a result, your feed turns entirely on the Fediverse server with which you signed up (your “local” news in your Fediverse community) and the accounts/people that you follow (your “home” timeline).

If you only follow a few people or accounts, then your home timeline will not have many posts. If you follow a lot of people or accounts or a person or two who is a prolific poster (I’m looking at you, @lisamelton@mastodon.social), then your home timeline may have more information than you can possibly follow as a normal human being.
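To make the "no algorithm" point concrete, here is a rough sketch of a home timeline as nothing more than the posts from accounts you follow, newest first. It is a simplified illustration, not Mastodon's actual code: the `Post` class, the `home_timeline` function, and the example handles are all invented for this sketch, and real timelines also include things like boosts and followed hashtags.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Post:
    author: str          # handle of the account that posted (hypothetical)
    posted_at: datetime  # when the post was published
    text: str


def home_timeline(posts, following):
    """Keep only posts from followed accounts and sort them newest first.

    There is no ranking or recommendation step: what you follow is what you see.
    """
    followed = [p for p in posts if p.author in following]
    return sorted(followed, key=lambda p: p.posted_at, reverse=True)


posts = [
    Post("@alice@example.social", datetime(2023, 7, 1, 9, 0), "Morning toot"),
    Post("@bob@example.art", datetime(2023, 7, 1, 12, 0), "Lunch sketch"),
    Post("@carol@example.org", datetime(2023, 7, 1, 15, 0), "Afternoon news"),
]
for post in home_timeline(posts, {"@alice@example.social", "@carol@example.org"}):
    print(post.posted_at, post.author, post.text)
```

Follow only one quiet account and this list is nearly empty; follow many prolific ones and it grows without anyone trimming it for you.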

So, this advice from @growlbeast@mastodon.art is spot on:

- FOLLOW LOTS OF PEOPLE.

- follow hashtags you have an interest in.

- USE HASHTAGS when you post.

In place of initially searching for topics and people, you can find possible accounts to follow at this Fediverse directory.

Using Mastodon

On a computer, you usually use Mastodon through a web browser (but Mastodon-specific apps are also now appearing for Windows, Apple, and Linux operating systems).

Just remember, because of the federated nature of Mastodon, you actually log in to your specific service. For example, when I connect to my fosstodon account, I log in at fosstodon.org.

There are official Mastodon apps on Android and iPhone. There are also numerous other apps that, frankly, offer even better experiences than the official smartphone apps (I use Ice Cubes, for instance). A search for “mastodon android/iphone client app reviews” will bring up numerous options for you. As others have already noted, Mastodon has become a playground for good computer app design. So, take advantage of these options.

Mastodon itself provides a list of Mastodon apps.

Mastodon controversies

Because you are part of a Fediverse server/community when you sign up, you are part of that community. If that community does not like what you post (i.e., there are complaints), you may find your account suspended temporarily or even permanently.

Note: Keep in mind that there is nothing preventing you from having multiple accounts and identities on the Fediverse. So, what may be problematic on one Fediverse may be entirely kosher for another Fediverse community. The trick is not to complain so much when one community may not like what you are posting about but to make sure your posts are right for the community/Fediverse from which you are tooting that particular information.

Because the Fediverse and Mastodon are open source, others are free to create their own Fediverse connections to the community. Facebook/Meta has hinted strongly at creating a twitter competitor based on Mastodon and the Fediverse, and rumors indicate a new app called Threads is slated to be released on July 6th of this year.

Note: Truth Social is another example of using the open source model of Mastodon to create a closed social network. Truth Social is essentially a single Fediverse community in disguise and closed off from communicating with other Fediverse communities.

Not everyone at Mastodon is happy with this Facebook/Meta connection to the Fediverse. Some Fediverse communities have vowed to disconnect from this Facebook/Meta community as soon as it tries to connect. Many are concerned that Facebook/Meta will simply use Fediverse accounts for data harvesting.

My own community — Fosstodon — has issued this sensible statement. In essence, it is a wait and see stance.

- As a team, we will review what the service is capable of and what advantages/disadvantages such a service will bring to the Fediverse

- We will then make a determination on whether we will defederate that service

- We will NOT jump on the bandwagon, or partake in the rumour mill that seems to be plaguing the Fediverse at the moment

It’s important to say that neither myself or Mike like anything that Facebook stands for. Neither of us use it, and both of us go to great lengths to avoid it when browsing the web. So if this service introduces any issues that could negatively impact our users, we will defederate.

However, we don’t know what this thing is yet. Hell, we don’t even know if this thing will actually exist yet. So let’s just wait and see.

What if this thing ends up being a service that can allow you to communicate with your friends who still use Facebook, via the Fedi, in a privacy respecting manner. That would be pretty cool, I think; especially when you consider that one of the main concerns with new users on the Fedi is that they can’t find their friends.

Finally, several former Twitter folks have been creating an independent, twitter-like service called Bluesky. What will happen with this effort remains to be seen, however.

Final thoughts on the Fediverse and Mastodon

There are some basic pieces of information everyone should understand about Mastodon. First, there are no confidential communications on Mastodon. Everything is open and public, including messages from one user to another. So, for lawyers there is no way to communicate confidentially with clients or anyone else on Mastodon.

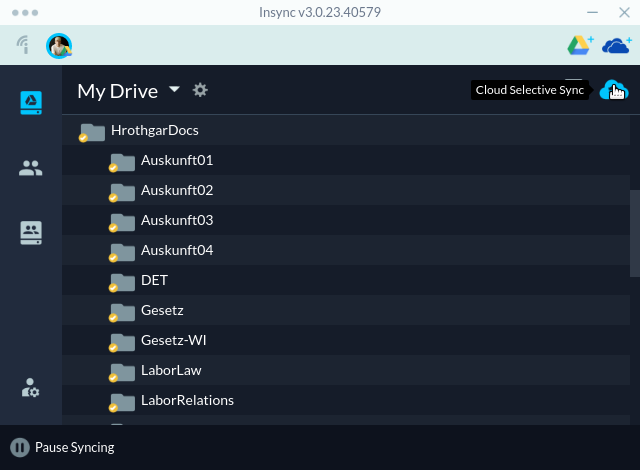

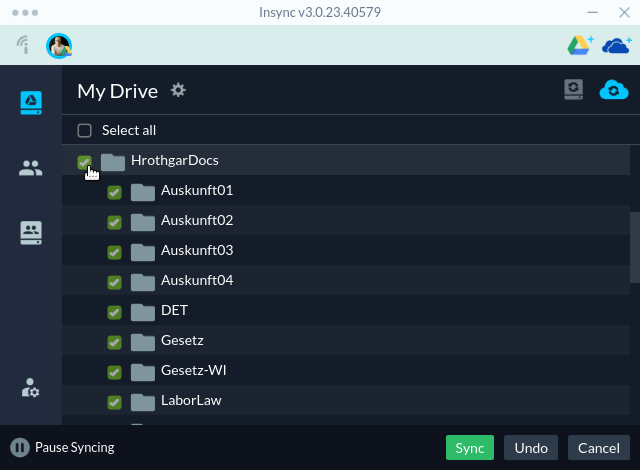

Second, organizations should create their own Fediverse communities. Given the federated nature of Mastodon, it seems natural that corporations and organizations will turn to the Fediverse to take control of their communities. The problems of Twitter demonstrate, if nothing else, that having your social media presence in the hands/whims of another entity is highly problematic both in the short term (having to fight misinformation) and even more so in the long term (losing any meaningful audience and participation by your “members”).

The state bar for Wisconsin, for example, could create a Fediverse server for its members (making the choice of which Fediverse to sign up with an easy one). This Fediverse, then, could serve as a mechanism for the state’s legal community to discuss and debate the legal issues of this state as well as present news of issues as they develop, much as Twitter once did.

Note: There is already a general lawyer community at @Esq.social. And, Lawprofblawg has been providing much needed laughs of late.

Third, general, mainstream news is still lacking on the Fediverse. A few news organizations/reporters have already taken to Mastodon, e.g., WGBH in Boston, Eric Gunn of Wisconsin Examiner, and Charlie Savage of NYTimes. But, the move from Twitter has been spotty at best, and the initial push into Mastodon in late 2022 has not produced the kind of active news feed that Twitter previously offered. I suspect there will be a bigger push into Mastodon as Twitter further declines.

Fourth, account verification requires your control of a website. Rather than having a central entity verify a person’s identity, verification is handled by posting some specific HTML code (a link with a rel=me attribute) on a website you control. This process makes sense because the identity and verification are simply based on what is already publicly accessible information. So, my fosstodon identity is verified because I have placed the needed tag on websites I control, something that a person who is NOT me presumably cannot do.
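As a rough illustration of the idea, the sketch below checks whether a web page contains a rel=me link pointing back to a Mastodon profile. It is a simplified stand-in, not how Mastodon itself is implemented: the names `RelMeFinder` and `page_verifies` are invented here, the page is passed in as a string rather than fetched, and the sample HTML is an assumed example.

```python
from html.parser import HTMLParser


class RelMeFinder(HTMLParser):
    """Collect the href of every <a> or <link> tag whose rel includes 'me'."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag not in ("a", "link"):
            return
        attr = dict(attrs)
        # rel can hold several space-separated values, e.g. rel="me noopener".
        rel_values = (attr.get("rel") or "").lower().split()
        if "me" in rel_values and attr.get("href"):
            self.links.append(attr["href"])


def page_verifies(html_text: str, profile_url: str) -> bool:
    """Return True if the page links back to profile_url with rel=me."""
    finder = RelMeFinder()
    finder.feed(html_text)
    return profile_url in finder.links


# Assumed example of the kind of tag placed on a website you control.
sample_page = '<p><a rel="me" href="https://fosstodon.org/@vforberger">Mastodon</a></p>'
print(page_verifies(sample_page, "https://fosstodon.org/@vforberger"))
# prints True
```

The point of the design is that only someone who can edit the website can add the link, so the link back to the profile is the proof of control.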

This information is the Fediverse in a nutshell. It is very different from the social media before it, but it is the model of social media to come. Do some exploring when you have the chance and join the club.